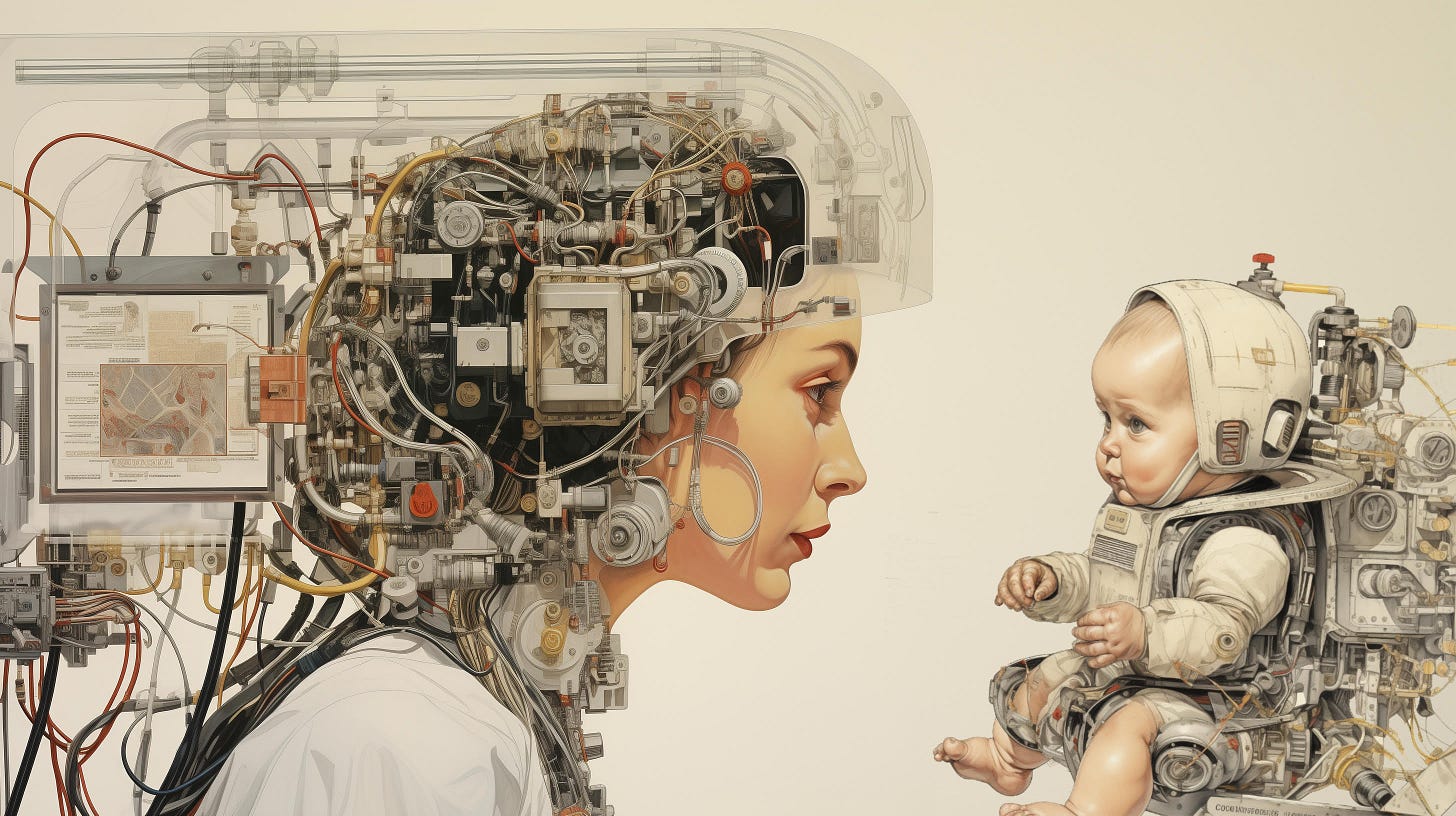

Mind Over Machine

This AI expert says evil humans will be a greater threat than malevolent AI overlords

Now that the tumult over the leadership at OpenAI has subsided, this week the company is celebrating the first anniversary of the commercial release of ChatGPT, the generative AI tool that is at times so effective it has led some to wonder about the possibility that AI may one day become self-aware and superintelligent.

The prevailing narratives surrounding the debate on artificial intelligence (AI) safety and the emergence of artificial general intelligence (AGI) have often, like many things nowadays, been fueled by emotion rather than logic. Pedro Domingos, a professor emeritus of computer science and engineering at the University of Washington, and the author of “The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World,” believes that we should be thinking about AI differently.

Rather than hew to the science fiction tropes that depict AI as an evil alien intelligence intent on enslaving or destroying the human race, Domingos believes that we have far more control than even some of the leading experts admit, and that the true fate of AI is humanity’s to fumble.

Earlier this year, I had a chance to speak with Domingos and get his answers to some of the biggest questions related to AI, AGI, the so-called AI alignment problem, and how our near-term collective future may play out. This is just a portion of our discussion; the full version can be heard or seen on The Hundred Year Podcast.

Adario Strange: A good amount of your commentary online pushes back against many of the AI doomsayers as well as the common ideas around The Singularity. Can you unpack that a bit more?

Pedro Domingos: Yes, this notion of The Singularity has been popularized by Ray Kurzweil, but it goes back to Irving John Good, and it's this notion that once machines know how to improve themselves, which is the promise of machine learning, a machine will build a better machine, which will build a meta machine, and machine intelligence will increase exponentially until it leaves us in the dust and it goes on without limit.

There's a sizable tribe of singularity believers out there, [but they don’t] actually intersect that much with real AI researchers. Most AI researchers think the whole notion is silly and embarrassing. And the first basic reason for that is that singularities do not exist in the real world. A singularity is the point at which a function goes to infinity, and infinity in the real universe doesn't exist, and people are always talking about these exponential curves in technology.

Ray Kurzweil’s book “The Singularity Is Near” is one exponential curve after another, and they’re very exciting, but the reality about exponential curves is that they always taper off. It’s physically impossible for them to go on forever. So what you have, which is very well known in technology and other areas, is what are called S-curves. An S-curve is a curve that goes up slowly first, and then quickly, which is when the exponential talk starts, and then it tapers off again. And so what you have at the end of the day is just a change from one level to another. S-curves can be short or long, they can be big or small leaps. AI in particular is going to be a very large S-curve.
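To make the contrast concrete, here is a minimal Python sketch (not from the interview) comparing pure exponential growth with the logistic function, a standard mathematical model of an S-curve. The parameter names and values (`scale`, `ceiling`, `rate`, `midpoint`) are illustrative assumptions, not estimates of how AI will progress.

```python
import math


def exponential(t: float, scale: float = 100.0, rate: float = 1.0,
                midpoint: float = 0.0) -> float:
    """Pure exponential growth: keeps accelerating and never levels off."""
    return scale * math.exp(rate * (t - midpoint))


def logistic(t: float, ceiling: float = 100.0, rate: float = 1.0,
             midpoint: float = 0.0) -> float:
    """Logistic S-curve: slow start, fast middle, then saturates at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


if __name__ == "__main__":
    # Before the midpoint the two columns nearly match; after it,
    # the exponential explodes while the logistic flattens toward 100.
    for t in range(-6, 7, 2):
        print(f"t={t:+d}  exponential={exponential(t):10.2f}  logistic={logistic(t):6.2f}")
```

Well before its midpoint the logistic curve is almost indistinguishable from the exponential, which is why the steep early stretch of an S-curve invites talk of unbounded growth.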

AI fears and the call to regulate the future

Strange: I think we all know about the open letter that was published earlier this year asking for a pause on AI. One of the most well-known signers of that document was Elon Musk (founder of xAI). Separately, another letter was recently published by the Center for AI Safety. Among the signatories of that letter were Sam Altman, the CEO of OpenAI, and Geoffrey Hinton, the computer scientist and former Google employee whom some refer to as “the godfather of AI.” Hinton says he left Google because he was concerned about AI safety.

So they published this letter, and it consisted of just one sentence, which read, “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” What is your gut reaction when you hear that statement?

Domingos: I know Geoffrey Hinton very well. He’s great. He’s a fantastic researcher. He's someone who, up until [several] months ago, by his own admission, couldn't care less about any of this. I remember something he said to me years ago [paraphrasing], “I know AI in the end is going to make the world a worse place.” Because he's a socialist, and he thinks capitalism is bad, and companies will make bad use of it. But he doesn't care, he’s doing research on it, because what he wants to do is understand how the brain works, and how this all pans out in the end. He doesn't care, he’s a federalist, that’s not his problem. Which is a little shocking, but there's a fair number of scientists who are like that.

What seems to have happened in the last few months, which actually surprised me, is that Geoff seems to have been fooled, like a lot of people, by the apparent signs of intelligence that things like ChatGPT show. The example he likes to give is that it explained a joke to him, and if you know how to explain a joke, you must be intelligent. Well, no, Geoff. Joke explanations are common on the web. There are only a few different kinds of jokes. What ChatGPT is doing is matching different explanations to the joke that you're giving it. Often, the first one isn't right. But then, after a few tries, it gets the right one. What it's doing is intelligent, to some degree. It’s not completely trivial. But ChatGPT doesn't understand jokes.

So I think Geoff got worried. Often scientists are like this, brilliant in some regards, very naive in others. He just woke up to this whole area of AI safety, and I think he's been taken in by a lot of the alarmism. Having said that, I think people have somewhat misrepresented his attitudes, and he has pushed back against this [saying things like], I'm not saying doom is upon us, I'm just saying I'm concerned about this, and we need to worry about it.

I think there's a spectrum of AI alarmism. For example, Yoshua Bengio, another co-founder of deep learning, is further on that spectrum. Yann LeCun (chief AI scientist at Meta) is very much on the skeptical side like I am. So Geoff has moved on that spectrum, but not as dramatically as the media is making it [seem].

I think these letters are extremely counterproductive. I know a lot of the people who signed [the first letter]. One after another, I heard them say [things like] this letter has problems, [and that they] don't really agree with half of it, but it was a good tool to raise awareness. And hey, it worked, right? So they're very proud of their publicity stunt. What I think they shouldn't be proud of is that they're destroying their credibility in the process. Next time, when they cry wolf [about AI safety], no one will believe them. They're free to destroy their own credibility, but they're also destroying the credibility of the scientific community.

Counter to what a scientist should be, they’re extremely short-sighted in this. I think the genesis of the second letter was more [designed to] boil things down to something that we all can agree with. So a lot of the nonsense got cut out, thank god. I still have a big problem with the letter. The chances of AI being an existential risk are not zero, but they’re nowhere near the level of pandemics or nuclear war. It's just irresponsible to put AI on that level. It’s bad for AI, and it's bad for those risks that we should be paying more attention to.

The question of control as AGI looms on the horizon

Strange: Let’s broach the topic of alignment. The idea is that we need to get these AI mechanisms to align with us in terms of our human goals, our needs, and what's best for us, as opposed to reaching a point where AI becomes self-possessed and is mostly interested in its own goals and needs. Is that the right way to frame it? What are your thoughts on the idea of alignment?

Domingos: It's the wrong way to frame it. My problem with alignment starts with the concept of alignment as applied to AIs. AIs don’t need to be aligned with humans. That concept is not relevant here, and here's why: Every AI and every machine learning system is controlled by an objective function. The objective function is the thing that it's trying to optimize. For example, in social media, notoriously, it’s engagement. And in online targeted ads, it’s clickthrough rate. It could just be accuracy. For example, large language models (LLMs) are trained to correctly predict the next word that you're going to say or write, and the accuracy in that is the objective function.
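As a toy illustration of an objective function for next-word prediction, the Python sketch below builds a hypothetical bigram model (it simply counts which word follows which) and scores it two ways: the accuracy Domingos mentions, and the average cross-entropy, which is the loss language models are in practice trained to minimize. The corpus and function names are assumptions for illustration only; real LLMs are neural networks trained on vastly more data.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_accuracy(counts, tokens):
    """Fraction of positions where the model's top guess is the actual next word."""
    pairs = list(zip(tokens, tokens[1:]))
    hits = sum(
        1 for prev, nxt in pairs
        if counts[prev] and counts[prev].most_common(1)[0][0] == nxt
    )
    return hits / len(pairs)


def cross_entropy(counts, tokens):
    """Average negative log-probability the model assigns to the actual next word.

    Assumes every pair in `tokens` was seen in training (no smoothing),
    so no probability is zero.
    """
    pairs = list(zip(tokens, tokens[1:]))
    total = 0.0
    for prev, nxt in pairs:
        followers = counts[prev]
        total -= math.log(followers[nxt] / sum(followers.values()))
    return total / len(pairs)


corpus = "the cat sat on the mat the cat ate the fish".split()
model = train_bigram(corpus)
print(next_word_accuracy(model, corpus))      # 0.7
print(round(cross_entropy(model, corpus), 4))  # 0.5545
```

The model only changes what it has counted; both scoring functions are fixed by whoever writes the training code.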

So their whole power—complexity, reams of data, thousands of GPUs—is all being used to serve that objective function. And this is a very important thing that everybody needs to know. The analogy I use for this is a car. You don't need to understand the mechanics of the engine, you need to understand how to use the steering wheel and the pedals. The steering wheel of a machine learning algorithm is the objective function. We don't need to align AI. Aligning is what you do between people who have different values, or maybe a company and its employees.

In AI, what we have to do is properly design the objective function. The AI, no matter how much it learns—and this is a crucial point—does not have the freedom to override the objective function. It can set subgoals from the menu that we provide, which is also important, but those subgoals can only ever be used in service of the overall goal. So if we do the job properly of setting the objective function, the alignment problem goes away, there is no such thing as the alignment problem. So I think we should be talking more about objective function design and less about alignment.
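A minimal Python sketch of this point, assuming plain gradient descent on a one-parameter model: the designer passes the objective in, and the training loop can only adjust the parameter `theta` to reduce it. All names, data, and settings here (`train`, `make_mean_squared_error`, `distance_from_ten`, the learning rate) are hypothetical, and the sketch does not model the subgoals Domingos mentions.

```python
from typing import Callable, Sequence

Objective = Callable[[float], float]


def numerical_gradient(objective: Objective, theta: float, eps: float = 1e-6) -> float:
    """Central-difference estimate of d(objective)/d(theta)."""
    return (objective(theta + eps) - objective(theta - eps)) / (2 * eps)


def train(objective: Objective, theta: float = 0.0,
          learning_rate: float = 0.1, steps: int = 200) -> float:
    """Gradient descent: learning updates theta; it never edits the objective."""
    for _ in range(steps):
        theta -= learning_rate * numerical_gradient(objective, theta)
    return theta


def make_mean_squared_error(data: Sequence[float]) -> Objective:
    """Objective chosen by the designer: fit theta to the average of `data`."""
    def objective(theta: float) -> float:
        return sum((x - theta) ** 2 for x in data) / len(data)
    return objective


def distance_from_ten(theta: float) -> float:
    """A different, equally arbitrary objective: push theta toward 10."""
    return (theta - 10.0) ** 2


mse = make_mean_squared_error([2.0, 3.0, 4.0])
print(round(train(mse), 6))                # 3.0
print(round(train(distance_from_ten), 6))  # 10.0
```

The same `train` loop lands on 3.0 or 10.0 depending only on which objective it is handed, which is the sense of Domingos's steering-wheel analogy.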

Science fiction has already given us the answer to one major AI problem

Strange: When AI researchers speak about alignment, it seems they're mostly speaking about the future. It sounds like you're speaking about the present and near future. Can you speak more to the [far] future? Specifically, I mean the assumption that these AI systems won't remain static. It seems like what you're saying is that you don't feel that AI systems will evolve to the point where they achieve some sort of artificial general intelligence (AGI) and become self-aware to some degree. Is that your contention, or not?

Domingos: No, I'm glad you asked that question. I think the better distinction here is not between near-term and long-term AI. AIs, the way they are built today and have been since the beginning, have these objective functions. It's actually a remarkably constant thing in machine learning: all sorts of things vary, but that basic structure doesn’t.

Now we can look at two scenarios. One is, does that basic structure continue? In which case, your AIs can get incredibly powerful, as powerful as you want to make them. But there is no alignment problem. There are nuances to this, but AGI can and probably will be achieved in this context with this type of system. In which case, again, it can be very general, but not really need alignment.

We can also talk about, well, what if there's some other model of AI that then prevails over this one? When you talk about AI evolving, it's important to distinguish between AI evolving because we, the AI engineers, are changing how we design it, and AI evolving because it's learning from data. If the AI is evolving because it's learning from data, it hasn't changed that basic paradigm. If we humans design AIs to be evil, they will be evil. There is no doubt that, humans being the way we are, someone will be irresponsible and try to design an AI to take over the world and kill humans. But then the problem there, I submit, is not with the AI; it's with those people.

We need a Turing police, [like the one] in William Gibson's novel [“Neuromancer”], to catch them and put them in jail. So you can certainly design AIs to do all sorts of evil things, but the problem is with the people who are doing the design. This whole notion that AIs will spontaneously turn evil as they evolve is what I find very far-fetched. I see no way in which that would happen in either of these scenarios.